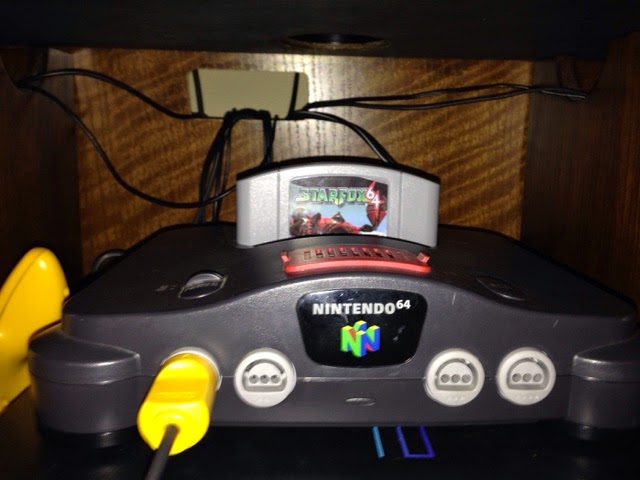

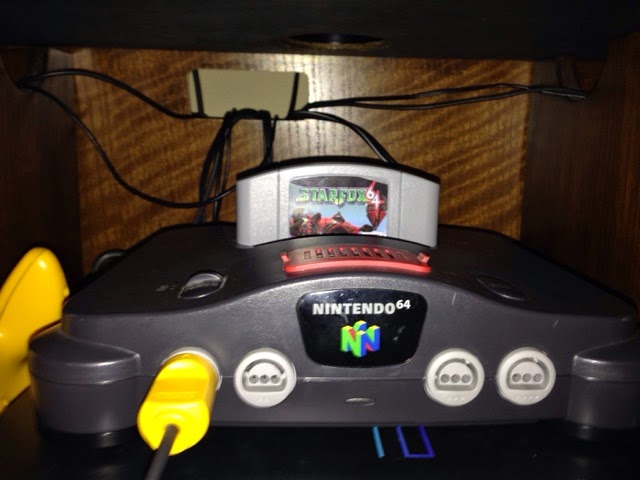

My son asks, “Hey Dad, why is it called the Nintendo 64?”

To which I reply, being a smart ass, “Because it came out in 1964!”

At first he accepts this answer, but about 5 seconds later he bounces back with, “No it didn’t, what’s the real reason?”

Essentially, this line of questioning opened a door on computer and video game history that would take a great deal of explaining. To be honest, unless you lived back then, the whole 64-bit thing is lost on you; after all, we live in a world where a console’s “bits” no longer matter. This is the era of the PS4 and XBOX One, not the XBOX 512 or the PlayStation 512. Nowadays it’s more about what the console can do besides playing video games than how the games play or look. So how do you explain the relevance of the “64” in Nintendo 64 to someone whose favorite console is an XBOX 360?

Of course I sat him down and talked to him about the various systems dating back to the Atari 2600, and the generations of consoles. He found it hard to believe that both the Atari 2600 and NES are 8-bit (sometimes I find it a little hard to believe that myself), but they are. Having played games on the Atari 2600, NES, SNES, N64, GameCube, and the Wii (a system of the same generation as his XBOX 360), he was familiar with how the games looked and played. This made it easy for me to explain how each system got better as processing speeds doubled, except for the odd 32-bit thing. He seemed to understand, but in a way it was somewhat irrelevant to someone of his generation. But then he asked a question about something we as retro gamers often overlook, and that I would like to talk about now: “Why and how did each generation double in speed?”

We as retro gamers look back and laugh at things like the “Bit Wars”, or how Intellivision claimed it was 16-bit and the Atari Jaguar claimed it was 64. We love our 8-bit and 16-bit systems, but we never really give much thought to the engineering and science behind them. What we as gamers have overlooked is a little something called “Moore’s Law”.

The law, named for Intel co-founder Gordon Moore, is usually stated like this: every 18-24 months, the number of transistors that fit on a chip doubles, and processing power roughly doubles along with it. So what does that mean? Well, computers, and even video game consoles, are made up of the same basic parts: the microprocessor (the computer’s brain), RAM (the system’s short-term, first-line memory), and graphics hardware such as graphics cards (how the computer draws things on screen). The basic concept behind Moore’s Law is that every 2 years or less the most basic component of these parts, the transistor, gets smaller, meaning more of them can fit on a tiny chip, making that chip more powerful. This means roughly twice as much processing power and memory in the same small space.
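If you want to see what that doubling adds up to, here is a minimal sketch in Python. The function name `projected_growth` and the default 2-year doubling period are my own choices for illustration; it is just the compounding arithmetic, not a model of any real chip.

```python
def projected_growth(years: float, doubling_period_years: float = 2.0) -> float:
    """Return how many times larger a quantity gets after `years`,
    assuming it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Compare the slow (24-month) and fast (18-month) ends of the range.
    for period in (2.0, 1.5):
        factor = projected_growth(10, period)
        print(f"Doubling every {period} years: about {factor:.0f}x after 10 years")
```

Over a single decade the gap between doubling every 24 months and every 18 months is the difference between roughly 32 times and roughly 100 times the starting point, which is why that "18-24 months" range matters more than it sounds.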

If we take ourselves back to the PCs of the mid to late 1990s, most of us can remember this law being in motion. Intel seemed to make leaps and bounds from the 33MHz 386s they had in 1990 to the 1+GHz Pentiums they would have by 2000. At times it seemed as if buying a computer back then was almost futile, since the computer would be virtually obsolete by the time you got it home from the store.
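As a rough sanity check on those Intel numbers, you can work backwards and ask how often clock speed would have had to double to get from 33MHz to 1GHz in ten years. This is only a sketch: the helper name `implied_doubling_period` is made up for this post, and clock speed is at best a loose stand-in for what Moore’s Law actually counts, which is transistors.

```python
import math


def implied_doubling_period(start: float, end: float, years: float) -> float:
    """Return the doubling period (in years) implied by growing
    from `start` to `end` over `years`."""
    doublings = math.log2(end / start)  # how many times the value doubled
    return years / doublings


if __name__ == "__main__":
    # 33 MHz in 1990 to 1000 MHz (1 GHz) in 2000, per the figures above.
    period = implied_doubling_period(33, 1000, 10)
    print(f"Implied doubling period: about {period:.1f} years")
```

It works out to a doubling roughly every two years, which lines up with the slower end of the 18-24 month range.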

These same obvious advances in 1990s PCs have been paralleled in video game consoles over the years. Yes, the Atari 2600 and NES both processed at 8-bit speeds, but even late Atari 2600 cartridges rarely went beyond 16kB of memory, with the average being about 4kB. NES cartridges, on the other hand, routinely ran to hundreds of kilobytes (the “megs” advertised on game boxes were megabits, not megabytes), meaning more complex programs could be written, stored, and executed on the cart. Not to mention that the NES’s system RAM was far higher at 2kB, compared to the Atari 2600’s 128 bytes. Here we saw what 6 to 8 years could do between these two systems, with regard to Moore’s Law. Lest we forget, of course, the NES spawned a video game craze, and we quickly went from 8 to 16, to 32, to 64-bit and so on, as every few years systems doubled in processing speed.

Here we see Moore’s Law taking place as console manufacturers found ways of integrating the faster processors in a constant battle of one-upmanship. So my son’s initial question, “Why is it called the Nintendo 64?”, gets answered: because video game processors had reached 64 bits, and Nintendo wanted the world to know they had a 64-bit system with the simple moniker “Nintendo 64”. Moore’s Law had led to the doubling and re-doubling of processor speeds until 64-bit was achievable and marketable.

Wait did I just say “marketable”?

Well, here’s the thing about Moore’s Law: if everything is getting smaller and doubling in speed, then why is it I can only get a 4GHz Intel i7 processor and a 512-bit Gen 8 console? Shouldn’t things be way faster? Here is one way of thinking about the implications of Moore’s Law. If you have enough money, you can go out today and buy yourself a car capable of doing 200+ miles an hour. Of course, then you have to ask the question: does this car get you to work any faster? I mean, sure, you look cool, but...? When you get down to it, whether it’s a Ferrari or a Dodge Caravan, there is only so fast you can go to get to work, since there are speed laws, traffic lights, and other cars on the road. So 200+ miles an hour means nothing in a real world where things move at a maximum speed of 55 MPH and average about 35 MPH.

In the world of computer programming and video game development it’s basically the same issue, since programs are only designed to work at particular speeds, as is the integrated circuitry that the processors, RAM, and graphics cards hook into. In a computer science lab a 10GHz processor can exist, but it will take circuit board designers and computer programmers years to deliver on the promises such a microprocessor can make, and by that time a 20 or 40GHz processor will be out.

In some ways this is why I believe 32-bit was almost a non-factor in video game console development. The technology must have followed hot on the heels of 16-bit system releases; however, trying to give it some time, manufacturers chose to let 16-bit live a little bit and opted to wait for 64-bit technology, so that a marketable period of time existed before pushing another system on the general public. In a way this is where Sega messed up, pushing the 32X out the door just shy of the Saturn’s release.

Of course, when it comes to PCs and video game consoles, Moore’s Law is almost immaterial nowadays as we wait for the programs and hardware to catch up. Where Moore’s Law is extremely evident is in our mobile technology. For instance, the maximum 64GB of the iPhone 5, released in late 2012, is going to be surpassed by the 128GB of the iPhone 6, released this year, only two years later. In a way this is a clear and current example of Moore’s Law being in effect, as the phone doubles in memory capacity within a two-year period. Of course, the shift from standard PCs and laptops to phones and tablets has changed the emphasis of micro-component development, as the smaller devices call for an even smaller and more powerful data infrastructure.

So as you take a look at your collection of consoles today, and even the cell phone in your pocket, be sure to remember Moore’s Law at work behind all of it.